A couple of weeks ago I chaired a Global CIO Institute conference, hosting a dinner, various talks, and round table discussions with CIOs.

What struck me during all these interactions was the marked contrast between the concerns of these CIOs at the coalface and the topics that dominate LinkedIn, academic, and journalistic discussions. While CIOs are interested in topics like digital transformation, AI, robotics, data lakes and lakehouses, the API economy, and the rise of ChatGPT (the usual LinkedIn fare), these were not what drove them. Their interest was much more in safely driving consequential innovation within their company’s line of business.

Of significant interest within this was the need to manage various forms of risk. Risk was not to be “avoided”; as Robin Smith, CISO at Aston Martin, put it, we need to promote “positive risk taking” for innovation. All intervention generates risk. For some this manifested as a need for guard-rails around IT innovation, so that creative and innovative staff were not constrained by the risk of a catastrophic failure. This is particularly true as low-code and citizen development expand. For CIOs, developing a culture of innovation demanded systems that allowed innovations to fail safely and elegantly.

Risk-taking behaviour within innovation was only one of the risks they faced. Sobering conversations concerned external sources of risk and the need for business resilience in the face of pandemic, war, and cyber-security challenges. Any innovation in digital technology increases the attack surface that companies expose. This demands ever more sophisticated (and expensive) technical countermeasures, but also cultural changes. While attention is driven towards the use of AI (like ChatGPT) for good, nefarious actors are thinking about how such tools might be used for ill. For example, attackers can use emails, telephone calls, and deep-fake video calls to sound, and even look, like a company’s CEO or top customer asking for help[1]. How can CIOs ensure their staff do not fall foul of these and various more technical scams? How can trust be established if identity is hard to prove? What happens when AI is applied to exploring possible attacks through public APIs?

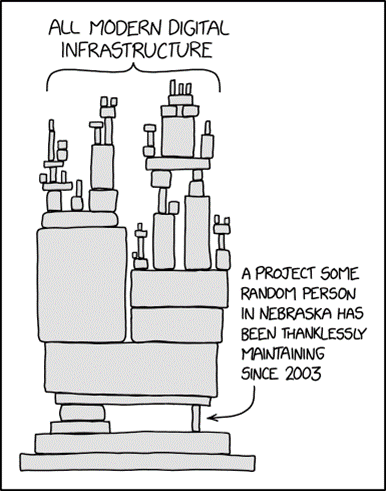

Also of significant concern was keeping the lights on across their ever more demanding and heterogeneous estate of products, platforms, and systems. One speaker pointed to the accompanying XKCD cartoon, which captures this so well. The law of unintended consequences dominated many of their fears, particularly as organisations moved towards exploiting new technologies in various forms.

Source: https://xkcd.com/2347/ (CC, XKCD, with thanks).

What was clear, and remains clear, is that we need a view of the enterprise technology landscape that balances risk and reward. While commentators ignore the complexity of legacy infrastructure, the burgeoning, bloated cloud-computing estates, and the risks involved in adding more complexity to these, those tasked with managing the enterprise IT estate cannot.

These thoughts are obviously not scientific and are entirely anecdotal. The CIOs I met were often selected to attend, and the conversations were steered by the agenda. But they did remind me why CIOs are not as obsessed with ChatGPT as everyone might think.

[1] An executive from Okta gave an example of this, citing The Register article “Binance exec says scammers made a deep fake hologram of him”.

Header Image “Business Idea” by danielfoster437 is licensed under CC BY-NC-SA 2.0.